Do you feel awkward talking to the computer? How about in front of other people? Voice control is still in its infancy, but speech recognition is getting better. By utilising cloud-based services, the big players are able to parse speech and recognise what is being said very well. Certainly your mobile can understand phrases like “navigate to work” or “call Aunt Jemima”. Well, it can if you use Google Voice or Siri; Samsung’s Bixby assistant still can’t understand English.

Skills and vocabulary

Far Side Cartoon (c) Gary Larson

Sadly your digital assistant does not really understand you! It has a limited instruction set which you learn how to use. Your device has a vocabulary of keywords such as “call” and “install”; if you go beyond that limited range there’s no understanding. Sure, the designers are clever, with the cute Easter eggs built into their systems. Try asking Siri “what’s your favourite colour?” or “what are you wearing?” and you get a clever canned response. The key word is canned: the system does not understand speech.
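To make the "limited vocabulary plus canned responses" idea concrete, here is a toy sketch in Python. It is not how Siri or any real assistant is implemented; the keyword table, the canned reply, and the `interpret` function are all made up for illustration. It simply shows why anything outside the known keywords falls flat.

```python
# Hypothetical keyword table: the first word of the phrase picks the action.
COMMANDS = {
    "call": "start_phone_call",
    "navigate": "start_navigation",
    "install": "install_app",
}

# Hypothetical canned "Easter egg" replies, matched on the exact phrase.
CANNED = {
    "what's your favourite colour?": "I'm rather fond of green.",
}


def interpret(utterance: str) -> str:
    """Map already-transcribed text to an action, or give up."""
    text = utterance.strip().lower()

    # Easter eggs: a fixed question gets a fixed answer, nothing more.
    if text in CANNED:
        return CANNED[text]

    # Commands: only the leading keyword is recognised; the rest is an argument.
    words = text.split()
    if words and words[0] in COMMANDS:
        argument = " ".join(words[1:])
        return f"{COMMANDS[words[0]]}({argument})"

    # Anything outside the vocabulary is simply not understood.
    return "Sorry, I didn't understand that."


print(interpret("Call Aunt Jemima"))
print(interpret("Please ring my aunt"))
```

The second phrase means the same thing as the first to a human, but because "please" is not in the keyword table the toy assistant has nothing to go on.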

Fixing this shortcoming is the next step for the companies, and the key is to recruit external developers. There are parallels with the iPhone. When it launched in 2007 you could only run the applications Apple shipped. The App Store was announced the following year and now hosts millions of apps.

Amazon are following a similar path with Alexa. Third-party developers can create their own ‘skills’ for Alexa. As this Wired article states, Alexa recently reached the milestone of 10,000 skills, up from 135 skills in 2015.

Skills are the element which can propel voice control from the limited state we have today to something truly useful. Accurate speech recognition plus the utility of skills will give us something that is a real breakthrough technology.
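As a rough illustration of why skills matter, the sketch below shows a registry that outside developers could add handlers to, so the assistant's vocabulary grows without the vendor writing every feature. This is not Amazon's actual skills API; the `skill` decorator, the `SKILLS` table, and the two example skills are hypothetical names invented for this example.

```python
from typing import Callable, Dict

# Hypothetical registry: trigger word -> handler supplied by a developer.
SKILLS: Dict[str, Callable[[str], str]] = {}


def skill(trigger: str):
    """Decorator that registers a handler under a trigger word."""
    def register(handler: Callable[[str], str]) -> Callable[[str], str]:
        SKILLS[trigger.lower()] = handler
        return handler
    return register


# Two made-up third-party skills.
@skill("weather")
def weather_skill(request: str) -> str:
    return f"Looking up the weather for {request or 'your location'}."


@skill("pizza")
def pizza_skill(request: str) -> str:
    return f"Ordering pizza: {request or 'the usual'}."


def handle(utterance: str) -> str:
    """Route transcribed text to whichever skill claims the first word."""
    words = utterance.strip().lower().split()
    if words and words[0] in SKILLS:
        return SKILLS[words[0]](" ".join(words[1:]))
    return "Sorry, no skill can handle that."


print(handle("weather London"))
print(handle("taxi home"))
```

Each new skill is one more entry in the table, which is the sense in which a jump from 135 to 10,000 skills makes the assistant more useful.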

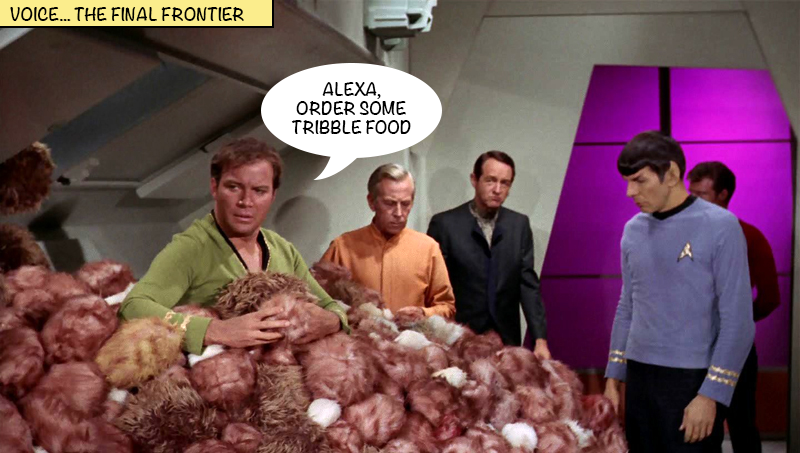

The Wired article quotes Amazon: “We had this inspiration of the Star Trek computer,” says Steve Rabuchin, who heads up Alexa voice services and skills at Amazon. “What would it be like if we could create a voice assistant out of the cloud that you could just talk to naturally, that could control things around you, that could do things for you, that could get you information?”

Maybe Star Trek is the future after all.

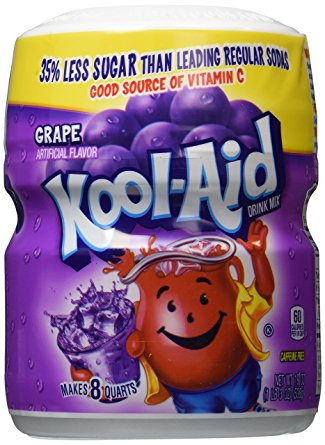

Our American friends have a lovely expression: “Drinking the Kool-Aid”. If you’re not American (and most of us aren’t) this isn’t always easy to understand. Kool-Aid is a relatively cheap powdered soft drink. The phrase refers to the 1978 Jonestown deaths of followers of the People’s Temple in a murder/suicide where the drink was mixed with poison.
